Earth Observation & GBIF occurrences

This example:

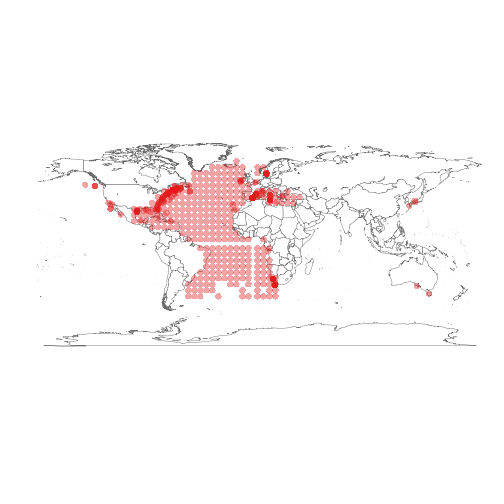

- Queries the Global Biodiversity Information Facility using the rOpenSci rgbif package for the Atlantic bluefin tuna (Thunnus thynnus) occurrences

- Uses the DBSCAN clustering algorithm to identify geospatial clusters

- Uses those clusters to query the European Space Agency Envisat MERIS Full Resolution Level 1 OpenSearch catalogue to get data about the Chlorophyll, suspended sediment and red tides of those clusters

- Selects the MERIS products covering at least 80% of the first identified cluster

Load the required packages with:

library("devtools")

library("rgbif")

library("fpc")

library("httr")

library("stringr")

library("XML")

library("RCurl")

library("sp")

library("rgeos")

library("maps")

library("RColorBrewer")

library("rOpenSearch")

library("rgbif")

library("knitr")

library("rworldmap")

Getting the Atlantic bluefin tuna (Thunnus thynnus) occurrences from GBIF with rgbif

# get the occurrences from GBIF using rgbif

key <- name_backbone(name='Thunnus thynnus', kingdom='animalia')$speciesKey

occurrences <- occ_search(taxonKey=key, limit=1000, return='data', hasCoordinate=TRUE)

occurrences <- occurrences[complete.cases(occurrences),]

This create the data frame occ with 592 entries. The first five entries look like:

| name | key | decimalLatitude | decimalLongitude |

|---|---|---|---|

| Thunnus thynnus | 923568366 | 28.07 | -17.348 |

| Thunnus thynnus | 857013819 | 18.73 | -68.449 |

| Thunnus thynnus | 857014199 | 36.02 | 14.280 |

| Thunnus thynnus | 857014239 | 36.08 | 14.236 |

| Thunnus thynnus | 857007848 | 41.88 | 3.198 |

The occurrences can be plotted to create the map:

Identifying geographical cluster of the occurrences

Density-based spatial clustering of applications with noise (DBSCAN) is a data clustering algorithm proposed by Martin Ester, Hans-Peter Kriegel, Jörg Sander and Xiaowei Xu in 1996. It is a density-based clustering algorithm because it finds a number of clusters starting from the estimated density distribution of corresponding nodes. DBSCAN is one of the most common clustering algorithms and also most cited in scientific literature.

DBSCAN requires two parameters: ε (eps) and the minimum number of points required to form a dense region[a] (minPts). It starts with an arbitrary starting point that has not been visited. This point's ε-neighborhood is retrieved, and if it contains sufficiently many points, a cluster is started. Otherwise, the point is labeled as noise. Note that this point might later be found in a sufficiently sized ε-environment of a different point and hence be made part of a cluster. If a point is found to be a dense part of a cluster, its ε-neighborhood is also part of that cluster. Hence, all points that are found within the ε-neighborhood are added, as is their own ε-neighborhood when they are also dense. This process continues until the density-connected cluster is completely found. Then, a new unvisited point is retrieved and processed, leading to the discovery of a further cluster or noise.

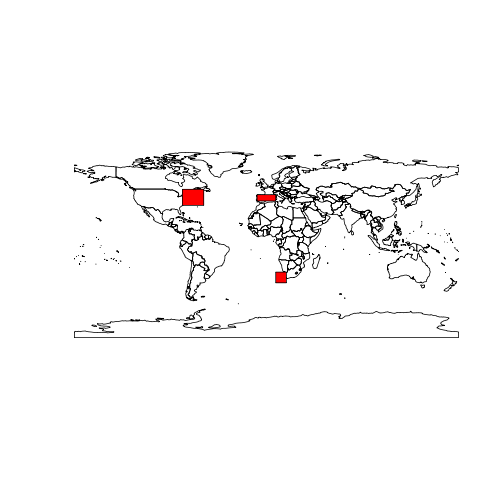

We will apply the DBSCAN clustering algorithm with ε value set to 5 and minPts to 20 to identify representative occurence geospatial clusters.

occurrences <- cbind(occurrences$decimalLongitude, occurrences$decimalLatitude)

eps <- 5

minpts <- 20

dbscan.res <- dbscan(occurrences, eps=eps, MinPts=minpts)

The DBSCAN algorithm identified 3 geographical clusters.

For each cluster the minimum bounding box is identified and plot.

mbr <- list()

plot(getMap())

#extract the minimum bounding box for each cluster

for(i in 1:max(dbscan.res$cluster)) {

# get the cluster

cl <- (occurrences[dbscan.res$cluster %in% i,])

# create the matrix with the cluster minimum bounding box

coords <- matrix(nrow=5, ncol=2, byrow=TRUE, data=c(

min(cl[,1]), min(cl[,2]),

max(cl[,1]), min(cl[,2]),

max(cl[,1]), max(cl[,2]),

min(cl[,1]), max(cl[,2]),

min(cl[,1]), min(cl[,2])))

# get the cluster geospatial envelope

if (gArea(gEnvelope(SpatialPoints(coords)))>0) mbr[[length(mbr)+1]] <- gEnvelope(SpatialPoints(coords))

plot(mbr[[length(mbr)]], add=TRUE, col="red")

}

Querying Envisat MERIS Level 1 data over a cluster

ESA hosts an OpenSearch catalogue available at: http://grid-eo-catalog.esrin.esa.int/catalogue/gpod/rdf

The MERIS Level 1 Full Resolution OpenSearch document is available at: http://grid-eo-catalog.esrin.esa.int/catalogue/gpod/MER_FRS_1P/description

# set the catalogue and retrieve the queryables

osd.description <- 'http://grid-eo-catalog.esrin.esa.int/catalogue/gpod/MER_FRS_1P/description'

response.type <- "application/rdf+xml"

q <- GetOSQueryables(osd.description, response.type)

q is a data frame containing all the queryables to specify a query to the catalogue:

| type | param | value |

|---|---|---|

| ws:type | resourcetype | NA |

| ws:protocol | protocol | NA |

| ws:ce | ce | NA |

| count | count | NA |

| startPage | startPage | NA |

| startIndex | startIndex | NA |

| sru:sortKeys | sort | NA |

| searchTerms | q | NA |

| time:start | start | NA |

| time:end | stop | NA |

| dct:modified | ingested | NA |

| geo:box | bbox | NA |

| geo:geometry | geometry | NA |

| geo:uid | uid | NA |

| eop:processingCenter | processingCenter | NA |

| eop:processorVersion | processorVersion | NA |

| eop:acquisitionStation | acquisitionStation | NA |

| eop:size | size | NA |

| eop:orbitNumber | orbitNumber | NA |

| eop:trackNumber | trackNumber | NA |

Let's set the count field type value to 200:

q$value[q$type == "count"] <- count

set out time of interest by setting the value of the field types time:start, time:end with:

q$value[q$type == "time:start"] <- "2006-01-01"

q$value[q$type == "time:end"] <- "2006-12-31"

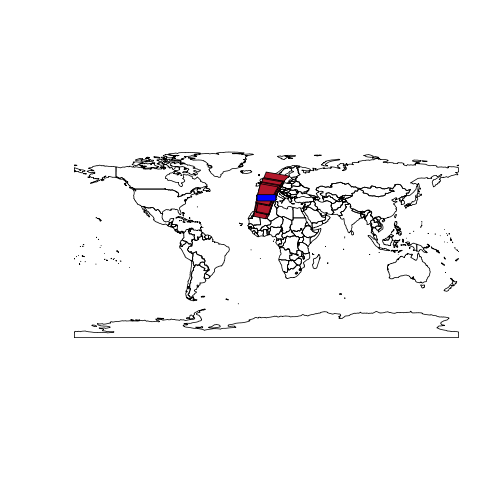

and set the geo-spatial queryable to the Well-Known Text value of the first cluster identified:

q$value[q$type == "geo:geometry"] <- writeWKT(mbr[[1]])

Our queryables data frame q is now updated with (removing NAs for readability):

| type | param | value | |

|---|---|---|---|

| 4 | count | count | 200 |

| 9 | time:start | start | 2006-01-01 |

| 10 | time:end | stop | 2006-12-31 |

| 13 | geo:geometry | geometry | POLYGON ((-8.7500000000000000 37.8689400000000020, -8.7500000000000000 43.7500000000000000, 8.7500000000000000 43.7500000000000000, 8.7500000000000000 37.8689400000000020, -8.7500000000000000 37.8689400000000020)) |

Let's query the catalogue and apply an xpath expression to retrieve the datasets as a data frame:

res <- Query(osd.description, response.type, q)

From the result, get the Datasets

dataset <- xmlToDataFrame(nodes = getNodeSet(xmlParse(res), "//dclite4g:DataSet"), stringsAsFactors = FALSE)

Let's inspect the first datasets (showing only the identifier column):

| MER_FRS_1PPEPA20061231_104209_000005122054_00180_25284_6705.N1 |

| MER_FRS_1PPEPA20061231_104158_000003352054_00180_25284_6703.N1 |

| MER_FRS_1PPEPA20061231_104146_000003602054_00180_25284_6708.N1 |

| MER_FRS_1PPEPA20061230_111346_000005122054_00166_25270_6672.N1 |

| MER_FRS_1PPEPA20061230_111336_000002592054_00166_25270_6671.N1 |

We now create a SpatialPolygonsDataFrame:

poly.sp <- SpatialPolygonsDataFrame(readWKT(data.frame(dataset$spatial)[1,]), dataset[1,])

for (n in 2:nrow(dataset)) {

poly.sp <- rbind(poly.sp, SpatialPolygonsDataFrame(readWKT(data.frame(dataset$spatial)[n,],id=n), dataset[n,]))

}

And check the coverage index of the MERIS products wrt the cluster minimum bounding box:

for (n in 1:nrow(dataset)) {

poly.sp[n,"coverage_index"] <- gArea(gIntersection(mbr[[1]], poly.sp[n,])) / gArea(mbr[[1]])

}

Select those with a coverage over 80%:

sp <- poly.sp[poly.sp$coverage_index > 0.8,]

Let's plot the 23 MERIS products that cover for at least 80% of the cluster area:

plot(getMap())

plot(sp, add=TRUE, col=brewer.pal(8 , "RdBu")[1])

plot(mbr[[1]], add=TRUE, col="blue")

Going further

The analysis could be repeated with the other clusters to identify what is the best cluster to work with MERIS data.